WHAT IS CYBER SECURITY?

Cyber security is the state or process of protecting networks, devices and programs from any type of cyberattack or unauthorized access, and of recovering them when such an attack occurs.

The main purpose of cyber security is to protect all organizational assets from both external and internal threats, as well as from disruptions caused by natural disasters.

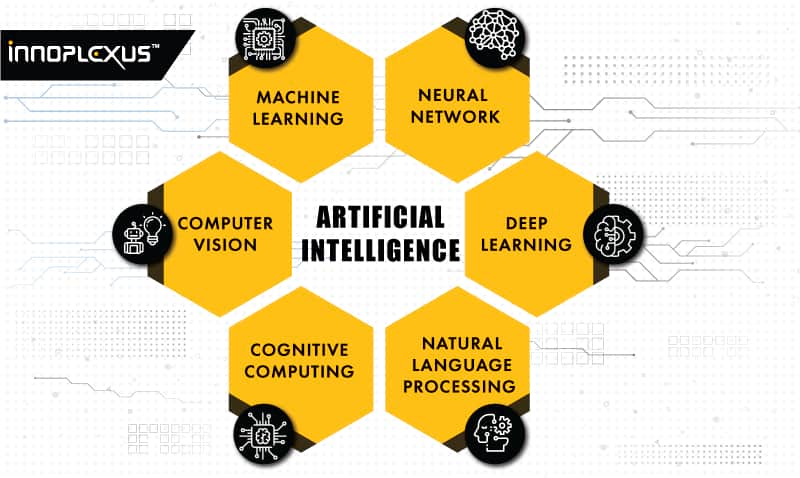

Because an organization's assets span multiple disparate systems, an effective and efficient cyber security posture requires coordinated effort across all of its information systems. Cyber security is therefore commonly divided into the following sub-domains:

Network Security protects network traffic by controlling incoming and outgoing connections to prevent threats from entering or spreading throughout the network.

Data Loss Prevention (DLP) protects data by locating, classifying and monitoring information at rest, in use and in motion.

Intrusion Detection Systems (IDS) identify potentially malicious activity on a network or host and raise alerts; Intrusion Prevention Systems (IPS) go further and automatically block it.

Identity and Access Management (IAM) uses authentication services to limit and track employee access to protect internal systems from malicious entities.

Encryption is the process of encoding data so that it is unintelligible to anyone without the decryption key. It is often used during data transfer to prevent theft in transit.

Antivirus/anti-malware solutions scan computer systems for known threats, typically by matching them against signatures. Modern solutions can also detect previously unknown threats based on their behavior.
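To make scanning for known threats more concrete, here is a minimal Python sketch of one simple form of signature matching: flagging files whose SHA-256 digest appears in a set of known-malicious hashes. The `KNOWN_BAD_SHA256` set, the sample bytes and the helper names are hypothetical; real anti-malware engines use far richer signatures plus behavioral analysis, neither of which this sketch attempts.

```python
import hashlib
from pathlib import Path

# Hypothetical signature database: SHA-256 digests of files known to be malicious.
# It holds a single made-up sample so the sketch is self-contained.
KNOWN_BAD_SHA256 = {hashlib.sha256(b"malicious-sample").hexdigest()}


def sha256_of_file(path: Path, chunk_size: int = 65536) -> str:
    """Hash a file in chunks so large files never have to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def scan_directory(root: Path) -> list[Path]:
    """Return every regular file under root whose digest matches a known-bad signature."""
    return [
        p
        for p in sorted(root.rglob("*"))
        if p.is_file() and sha256_of_file(p) in KNOWN_BAD_SHA256
    ]
```

Exact-hash matching misses any file that differs from the known sample by even one byte, which is one reason modern products also look at behavior.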

Application Security

Application security builds defenses against a wide range of threats into all the software and services an organization uses. It requires designing secure application architectures, writing secure code, implementing strong input validation, threat modeling, etc. to minimize the likelihood of unauthorized access to, or modification of, application resources.
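As one concrete example of writing secure code, the sketch below uses parameterized queries with Python's built-in `sqlite3` module, so user input is always passed to the database as data and never spliced into the SQL text. The `users` table, its rows and the `find_user` helper are hypothetical.

```python
import sqlite3


def find_user(conn: sqlite3.Connection, username: str):
    """Look up a user with a parameterized query.

    The "?" placeholder makes the driver bind `username` as a value, so input
    such as "x' OR '1'='1" cannot change the structure of the statement.
    """
    cur = conn.execute("SELECT id, username FROM users WHERE username = ?", (username,))
    return cur.fetchone()


# Demo with an in-memory database (hypothetical table and rows).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT NOT NULL)")
conn.executemany("INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)])

print(find_user(conn, "alice"))         # (1, 'alice')
print(find_user(conn, "x' OR '1'='1"))  # None: treated as a literal username
```

Most database drivers offer the same mechanism, although the placeholder syntax (`?`, `%s`, `:name`) varies by driver.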

Mobile Security

Mobile security refers to protecting both organizational and personal information stored on mobile devices like cell phones, laptops and tablets from threats such as unauthorized access, device loss and malware.

Cloud Security

Cloud security relates to designing secure cloud architectures and applications for organizations that use cloud service providers such as AWS, Google, Azure and Rackspace. Effective architecture and environment configuration help protect against many threats.

Disaster recovery and business continuity planning (DR&BC)

DR&BC deals with the processes, monitoring, alerts and plans that help organizations keep business-critical systems online during and after any kind of disaster, and resume lost operations and systems after an incident.

User education

Training individuals in computer security is essential for raising awareness of industry best practices and organizational procedures and policies, as well as of how to monitor for and report malicious activity.

Common types of cyber threats

Malware

Malicious software such as computer viruses, spyware, Trojan horses, and keyloggers.

Phishing

The practice of obtaining sensitive information (e.g., passwords, credit card information) through a disguised email, phone call or text message.

Social engineering

The psychological manipulation of individuals to obtain confidential information; it often overlaps with phishing.

Advanced Persistent Threat

An attack in which an unauthorized user gains access to a system or network and remains there undetected for an extended period of time.

WHAT IS A SECURITY BREACH?

A security breach occurs when an intruder gains unauthorized access to an organization’s protected systems and data: cyber criminals or malicious applications bypass security mechanisms to reach restricted areas. A security breach is an early-stage violation that can lead to further harm such as system damage and data loss.

Top 11 cyber security best practices to prevent a breach

1. Conduct cyber security training and awareness

A strong cyber security strategy will not succeed if employees are not educated on cyber security, company policies and incident reporting. Even the best technical defenses may fall apart when employees take unintentional or intentionally malicious actions that result in a costly security breach. Educating employees and raising awareness of company policies and security best practices through seminars, classes and online courses is one of the most effective ways to reduce negligence and security violations.

2. Perform risk assessments

Organizations should perform a formal risk assessment to identify all valuable data and other assets, and prioritize them based on the impact that would result if each were compromised. This helps organizations decide how best to spend their resources on securing each valuable asset.
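The text asks for prioritization by impact; a common way to make this concrete is to score each asset on impact and likelihood of compromise and sort by their product. The sketch below assumes 1-5 scales for both; the scales, the example assets and their scores are illustrative assumptions, not a standard.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    name: str
    impact: int      # assumed scale: 1 (negligible) .. 5 (severe) if compromised
    likelihood: int  # assumed scale: 1 (rare) .. 5 (almost certain)

    @property
    def risk(self) -> int:
        # Simple qualitative score: likelihood x impact, range 1..25.
        return self.impact * self.likelihood


def prioritize(assets: list[Asset]) -> list[Asset]:
    """Highest-risk assets first; ties are broken by higher impact."""
    return sorted(assets, key=lambda a: (a.risk, a.impact), reverse=True)


# Hypothetical inventory.
inventory = [
    Asset("public marketing site", impact=2, likelihood=4),
    Asset("customer database", impact=5, likelihood=3),
    Asset("internal wiki", impact=3, likelihood=2),
]
for a in prioritize(inventory):
    print(f"{a.risk:>2}  {a.name}")
```

Sorting on impact as a tie-breaker reflects the text's emphasis on impact; an organization could equally weight the two factors differently.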

3. Ensure vulnerability management and software patch management/updates

It is crucial for an organization's IT teams to identify, classify, remediate and mitigate vulnerabilities in all the software and networks it uses, in order to reduce threats against its IT systems. Security researchers and attackers regularly discover new vulnerabilities in software, which are either reported to the software vendors or released to the public, and these vulnerabilities are often exploited by malware and cyber attackers. Software vendors periodically release updates that patch or mitigate them, so keeping IT systems up to date helps protect organizational assets.
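A minimal sketch of the "is this system patched?" check: compare installed versions against the first fixed version listed in an advisory. The package names, versions and the `advisories` mapping are hypothetical, and the parser assumes purely numeric dotted versions; real vulnerability management relies on scanners and vendor advisories.

```python
def parse_version(v: str) -> tuple[int, ...]:
    """Turn '2.4.57' into (2, 4, 57). Assumes purely numeric dotted versions."""
    return tuple(int(part) for part in v.split("."))


def needs_patch(installed: dict[str, str], minimum_fixed: dict[str, str]) -> list[str]:
    """List installed packages older than the first version that fixes a known issue."""
    outdated = []
    for name, fixed in minimum_fixed.items():
        current = installed.get(name)
        # Compare numerically, so '1.10' is correctly treated as newer than '1.9'.
        if current is not None and parse_version(current) < parse_version(fixed):
            outdated.append(name)
    return sorted(outdated)


# Hypothetical inventory and advisory data.
installed = {"webserver": "2.4.49", "tls-lib": "3.0.7", "db": "15.2"}
advisories = {"webserver": "2.4.51", "tls-lib": "3.0.7", "db": "15.4"}
print(needs_patch(installed, advisories))  # ['db', 'webserver']
```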

4. Use the principle of least privilege

The principle of least privilege states that both software and personnel should be granted the minimum permissions necessary to perform their duties. This helps limit the damage of a successful security breach, because user accounts and software with lower permissions cannot affect valuable assets that require a higher level of permissions. In addition, two-factor authentication should be required for all privileged accounts, especially those with unrestricted permissions.
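Least privilege can be sketched as a deny-by-default permission check, with a second factor additionally required for the privileged role. The role names, permission strings and the `mfa_verified` flag are hypothetical; in practice the second factor would be verified by an identity provider rather than passed in as a boolean.

```python
# Hypothetical role-to-permission mapping: each role gets only what its duties need.
ROLE_PERMISSIONS: dict[str, frozenset[str]] = {
    "viewer": frozenset({"report:read"}),
    "analyst": frozenset({"report:read", "report:export"}),
    "admin": frozenset({"report:read", "report:export", "user:manage"}),
}

# Roles with broad permissions that must also pass a second authentication factor.
PRIVILEGED_ROLES = frozenset({"admin"})


def is_allowed(role: str, permission: str, mfa_verified: bool = False) -> bool:
    """Deny by default: unknown roles and unlisted permissions are refused."""
    granted = ROLE_PERMISSIONS.get(role, frozenset())
    if permission not in granted:
        return False
    if role in PRIVILEGED_ROLES and not mfa_verified:
        return False
    return True
```

Because permissions are granted explicitly per role, a compromised `viewer` account cannot export reports or manage users.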

5. Enforce secure password storage and policies

Organizations should require all employees to use strong passwords that adhere to industry-recommended standards. Passwords should also be changed whenever there is evidence that they may have been compromised; note that current NIST guidance (SP 800-63B) advises against forcing arbitrary periodic changes. Furthermore, password storage should follow industry best practices: salt each password and hash it with a strong, deliberately slow hashing algorithm.
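Python's standard library includes PBKDF2-HMAC (`hashlib.pbkdf2_hmac`), so it is used here as one example of a salted, deliberately slow password hash; bcrypt, scrypt and Argon2 are common alternatives. The iteration count is an assumption loosely based on recent OWASP guidance for PBKDF2-HMAC-SHA256; check current recommendations before relying on it.

```python
import hashlib
import hmac
import os

# Assumed work factor; higher values slow down offline guessing (and logins).
PBKDF2_ITERATIONS = 600_000


def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, hash). A fresh random salt per password defeats precomputed tables."""
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, PBKDF2_ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the hash with the stored salt and compare in constant time."""
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, PBKDF2_ITERATIONS)
    return hmac.compare_digest(digest, expected)
```

In a real system the salt (and the iteration count) would be stored alongside the hash, so the work factor can be raised later without invalidating existing records.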

6. Implement a robust business continuity and incident response (BC-IR) plan

Having solid BC-IR plans and policies in place helps an organization respond effectively to cyber attacks and security breaches while keeping critical business systems online.

7. Perform periodic security reviews

Putting all software and networks through periodic security reviews helps identify security issues early and in a controlled environment. Security reviews include application and network penetration testing, source code reviews, architecture design reviews, red team assessments, etc. Once security vulnerabilities are found, organizations should prioritize and mitigate them as soon as possible.
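One widely used way to prioritize review findings is by CVSS base score. The sketch below maps a CVSS v3.x score to its qualitative severity rating (None, Low, Medium, High, Critical) and orders findings most-severe first. The findings and their scores are hypothetical.

```python
def cvss_severity(score: float) -> str:
    """Map a CVSS v3.x base score (0.0-10.0, one decimal) to its qualitative rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS score out of range: {score}")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


# Hypothetical findings from a review: (title, CVSS base score).
findings = [
    ("Verbose error messages", 3.1),
    ("SQL injection in search", 9.8),
    ("Missing rate limiting on login", 5.3),
]

# Fix the most severe findings first.
for title, score in sorted(findings, key=lambda f: f[1], reverse=True):
    print(f"{cvss_severity(score):<8} {score:>4}  {title}")
```

A base score ignores business context, so in practice it is usually combined with the asset-level impact from a risk assessment.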

8. Backup data

Backing up all data periodically increases redundancy and helps ensure that sensitive data is not lost after a security breach. Attacks such as injection and ransomware compromise the integrity and availability of data, and backups help an organization recover in such cases.
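Backups only help if they are intact when they are needed. A common complementary practice is to record a checksum for every backed-up file and verify the backup against that manifest later. This sketch assumes a backup is simply a directory of files and that the manifest is kept outside it, ideally on separate, write-protected storage; the function names are hypothetical.

```python
import hashlib
import json
from pathlib import Path


def file_sha256(path: Path) -> str:
    """SHA-256 of a file, read in chunks."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def write_manifest(backup_dir: Path, manifest: Path) -> None:
    """Record a digest for every file when the backup is taken (manifest lives outside backup_dir)."""
    digests = {
        str(p.relative_to(backup_dir)): file_sha256(p)
        for p in sorted(backup_dir.rglob("*"))
        if p.is_file()
    }
    manifest.write_text(json.dumps(digests, indent=2))


def verify_backup(backup_dir: Path, manifest: Path) -> list[str]:
    """Return files that are missing or whose contents changed since the manifest was written."""
    expected = json.loads(manifest.read_text())
    problems = []
    for rel, digest in expected.items():
        p = backup_dir / rel
        if not p.is_file() or file_sha256(p) != digest:
            problems.append(rel)
    return problems
```

If an attacker can modify both the backup and its manifest, checksums alone prove nothing, which is why the manifest should be stored separately.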

9. Use encryption for data at rest and in transit

All sensitive information should be stored and transferred using strong encryption algorithms. Encryption protects the confidentiality of data only as long as the keys stay secret, so effective key management and rotation policies should also be put in place. All web applications and other networked software should use TLS (the successor to SSL) for data in transit.

10. Design software and networks with security in mind

When creating applications, writing software or architecting networks, always design them with security in mind. Remember that the cost of refactoring software to add security measures later is far greater than building security in from the start. Applications designed this way reduce threats and ensure that when software or networks fail, they fail safe (for example, by denying access rather than granting it).
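"Fail safe" can be illustrated with an authorization wrapper that fails closed: if the policy check itself breaks, the request is denied instead of slipping through. `check_policy` and `PolicyServiceError` are hypothetical stand-ins for a real authorization backend; here the backend simulates an outage for one action.

```python
import logging

logger = logging.getLogger("authz")


class PolicyServiceError(Exception):
    """Hypothetical error raised when the authorization backend is unavailable."""


def check_policy(user: str, action: str) -> bool:
    """Hypothetical policy lookup that simulates an outage for one action."""
    if action == "delete_account":
        raise PolicyServiceError("policy backend timed out")
    return action == "read_profile"


def authorize(user: str, action: str) -> bool:
    """Fail closed: any error during the check results in a denial, never an implicit allow."""
    try:
        return check_policy(user, action) is True
    except Exception:  # deliberately broad: an unexpected failure must not grant access
        logger.exception("authorization check failed; denying %s for %s", action, user)
        return False
```

The `is True` comparison also means that an unexpected return value (such as `None`) is treated as a denial.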

11. Implement strong input validation and industry standards in secure coding

Strong input validation is often the first line of defense against various types of cyber attacks. Because software accepts user input, that input is an avenue for attack, and strong input validation filters out malicious payloads before the application processes them. Furthermore, secure coding standards should be followed when writing software, as they help avoid many of the most prevalent vulnerabilities catalogued by OWASP and in the CVE list.
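A minimal sketch of allowlist validation, which describes exactly what valid input looks like and rejects everything else, rather than trying to enumerate every malicious pattern. The username rule and the quantity range are hypothetical policies chosen for illustration. Validation complements, rather than replaces, safe handling downstream such as parameterized queries and output encoding.

```python
import re

# Assumed policy: 3-32 characters, lowercase ASCII letters, digits or underscore, starting with a letter.
USERNAME_RE = re.compile(r"[a-z][a-z0-9_]{2,31}")


class ValidationError(ValueError):
    pass


def validate_username(raw: str) -> str:
    """Normalize, then accept only input that fully matches the allowlist pattern."""
    candidate = raw.strip().lower()
    if not USERNAME_RE.fullmatch(candidate):
        raise ValidationError("username must be 3-32 letters, digits or underscores, starting with a letter")
    return candidate


def validate_quantity(raw: str, lo: int = 1, hi: int = 100) -> int:
    """Parse an integer and enforce a range instead of trusting the client."""
    try:
        value = int(raw)
    except ValueError:
        raise ValidationError("quantity must be an integer") from None
    if not lo <= value <= hi:
        raise ValidationError(f"quantity must be between {lo} and {hi}")
    return value
```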